This is a quickstart guide to using the compute clusters on HPC2N. It is assumed you have accounts at SUPR and HPC2N.

- If you need an overview of what a computer cluster is, read our Beginner’s intro to clusters

- If you would like a short Linux intro, read our Linux guide and Cheat Sheet

- If you need accounts at SUPR or at HPC2N, please read our page about getting User Accounts

The characters $ and b-an01 [~]$ represent the prompt and should not be included in the commands you type.

Logging in to ‘Kebnekaise’¶

Access to our systems is possible by using SSH. You will need an SSH client for this. There are several options, depending on your operating system. In many cases using ThinLinc will be the easiest, and this is what we recommend if you do not already have a favourite SSH client.

Password¶

You will be asked for the temporary password you got from the link in your welcome mail (or else you can get it here).

If this is the first time you are using any of the HPC2N facilities, please change your temporary password immediately after you have logged in.

This is done with the command “passwd” run in a terminal. You will first be asked for your current password (the temporary one you got from the above link) and then for your new password. You will then have to repeat the new password.
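In a terminal on Kebnekaise this is a single command:

```bash
# Change your password: you are asked for the current (temporary) password,
# then twice for the new one. Nothing is shown on screen while you type.
passwd
```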

ThinLinc¶

ThinLinc is a cross-platform remote desktop server developed by Cendio AB, and you can use it to access Kebnekaise. It is especially useful if you need software with a graphical interface, and it is usually faster than running graphical programs over SSH with X11 forwarding. You can access Kebnekaise with ThinLinc through either the Web Access desktop or the standalone application.

Web Access desktop¶

In your local web browser, go to https://kebnekaise-tl.hpc2n.umu.se:300/. This will display the login box.

Use your HPC2N username and password to log in.

The Web Access version is good if you are using a tablet or do not want to install software on your computer, but some features are not supported.

Standalone application¶

The full capabilities of ThinLinc can be obtained with the standalone version. In this case you need to download and install ThinLinc. You can get the client from: https://www.cendio.com/thinlinc/download.

Start the ThinLinc client and log in to the server kebnekaise-tl.hpc2n.umu.se with your HPC2N username and password.

For more information on using ThinLinc with HPC2N’s systems, see our ThinLinc connection guide.

Linux and macOS¶

An SSH client is included, so you can just open a terminal and connect with the ssh command, using your HPC2N username (written as USERNAME in this guide).
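For example, assuming the login address is kebnekaise.hpc2n.umu.se, the command is:

```bash
# Replace USERNAME with your HPC2N username
ssh USERNAME@kebnekaise.hpc2n.umu.se
```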

You will be asked for the temporary password you got from the link in your welcome mail (or else you can get it here).

If you need a graphical user interface for anything, we recommend ThinLinc, as it is faster. However, it is also possible to connect over SSH with X11 forwarding enabled, so that graphical programs display on your local screen.
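With OpenSSH, X11 forwarding is requested with the -Y option (again assuming the login address kebnekaise.hpc2n.umu.se):

```bash
# -Y enables trusted X11 forwarding, so graphical programs started on
# Kebnekaise open their windows on your local display
ssh -Y USERNAME@kebnekaise.hpc2n.umu.se
```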

Note that if you are using macOS, depending on the version, you may have to install XQuartz (an X11 server for macOS) first.

For more information on connecting from Linux, see our Linux connection guide.

For more information on connecting from macOS, see our macOS connection guide.

Windows¶

Older versions of Windows do not include an SSH client, so you may need to install one; there are several stand-alone clients available (recent versions of Windows 10 and Windows 11 also include an OpenSSH client that can be used from PowerShell). However, we strongly recommend that you use ThinLinc. If you need a graphical interface, ThinLinc is also easier, as you would otherwise have to install an X11 server, such as Xming, as well.

For more information on connecting from Windows, see our Windows connection guide.

Storing your files¶

By default, you have 25GB in your home directory. If you need more, you can accept the “default storage” you will be offered after applying for compute resources. The default storage is 500GB. Another option is to apply for an actual storage project.

Because of the small size of your home directory, we strongly recommend that you acquire and use project storage for your files and data.

You can read more about this in the section about the different filesystems at HPC2N.

Editors¶

Some of the installed editors are more suited for a GUI environment and some are more suited for a command line environment. If you are using the command line and do not know any of the installed editors, then it is recommended that you use “nano”.

Simple commands for nano¶

- Starting “nano”: Type nano FILENAME on the command line and press Enter. FILENAME is whatever you want to call your file.

- If FILENAME is a file that already exists, nano will open the file. If it does not exist, it will be created.

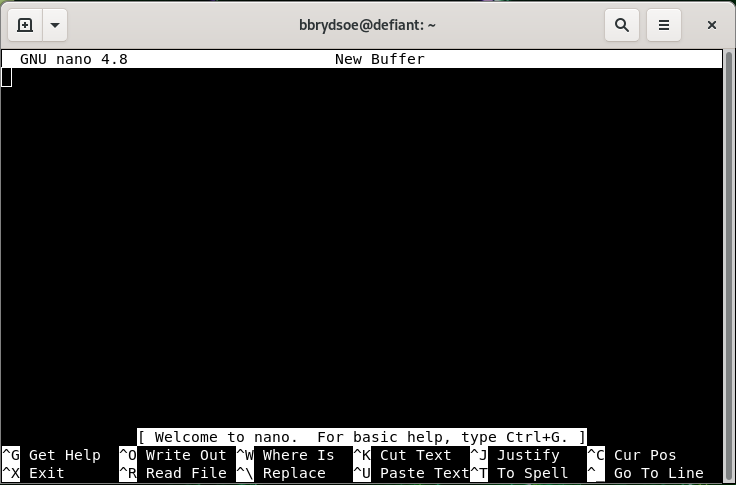

- The editor will look like this:

- Note that many of the commands are listed at the bottom

- The ^ before the letter-commands means you should press CTRL and then the letter (while keeping CTRL down).

- Your prompt is in the editor window itself, and you can just type (or copy and paste) the content you want in your file.

- When you want to exit (and possibly save), you press CTRL and then x while holding CTRL down (this is written CTRL-x or ^x).

- nano will ask you if you want to save the content of the buffer to the file. After that it will exit.
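A short session, using the hypothetical file name myjob.sh:

```bash
# Open myjob.sh in nano (it is created if it does not exist yet)
nano myjob.sh
# Type or paste your content, then press CTRL-x, answer y to save the buffer,
# and press Enter to confirm the file name
```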

More information about installed editors at HPC2N’s systems can be found in the section “Editors” in the Linux guide.

Accessing software applications (the module system)¶

HPC2N uses a set of tools called EasyBuild and Lmod to install and manage software packages and their accompanying modules.

Modules are

- used to set up your environment (paths to executables, libraries, etc.) for using a particular (set of) software package(s)

- a tool to help users manage their Unix/Linux shell environment, allowing groups of related environment-variable settings to be made or removed dynamically

- a way to have multiple versions of a program or package installed side by side, choosing one by loading the corresponding module

- installed in a hierarchical layout. This means that some modules are only available after loading a specific compiler and/or MPI version.

Important commands

- ml lists the currently loaded modules, and is equivalent to module list

- ml spider lists all software modules, and is equivalent to module spider

- ml spider SOFTWARE searches (case-insensitively) for SOFTWARE in the whole module hierarchy, and is equivalent to module spider SOFTWARE

- ml spider SOFTWARE/VERSION shows information about the module SOFTWARE/VERSION, including its prerequisite modules

- ml SOFTWARE/VERSION loads the SOFTWARE/VERSION module, and is equivalent to module load SOFTWARE/VERSION

- ml -SOFTWARE unloads the currently loaded SOFTWARE module, and is equivalent to module unload SOFTWARE

- ml av lists the modules that are currently available to load (marking those already loaded), and is equivalent to module avail

- ml purge unloads all modules except the “sticky” modules (snicenvironment and systemdefault, which define some important environment variables), and is equivalent to module purge

Example¶

Gromacs

Find available versions

Check how to load a specific version (here GROMACS/2019.4-PLUMED-2.5.4)

Load the CUDA-enabled version of the above (from the previous command you learn that the prerequisites are GCC/8.3.0 CUDA/10.1.243 OpenMPI/3.1.4)
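The three steps above correspond roughly to the following commands. The module versions are the ones named in this example and may since have been replaced on Kebnekaise, so check the output of the first command before loading anything:

```bash
# 1. Find the available GROMACS versions (the search is case-insensitive)
ml spider gromacs

# 2. Show how to load one specific version, including its prerequisite modules
ml spider GROMACS/2019.4-PLUMED-2.5.4

# 3. Load the prerequisites first, then GROMACS itself
ml GCC/8.3.0 CUDA/10.1.243 OpenMPI/3.1.4
ml GROMACS/2019.4-PLUMED-2.5.4

# List what is now loaded
ml
```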

For more information about the module system, see the section “The module system”.

Compiling and linking with libraries¶

Both Intel and GCC compiler suites are installed on Kebnekaise, and there are C, C++, Fortran 77, Fortran 90, and Fortran 95 compilers available for both.

Information can be found in the section about Compiling.

The Batch System (SLURM)¶

A set of computing tasks submitted to a batch system is called a job. Jobs can be submitted in two ways: a) from a command line or b) using a job script. We recommend using a job script as it makes troubleshooting easier and also allows you to keep track of batch system parameters you used in the past.

Large, long, and/or parallel jobs must be run through the batch system.

The batch system on Kebnekaise is, like on most Swedish HPC centres, handled through SLURM.

SLURM is an Open Source job scheduler, which provides three key functions:

- Keeping track of available system resources

- Enforcing local system resource usage and job scheduling policies

- Managing a job queue and distributing work across resources according to policies

To run a batch job, you need to:

- create a SLURM batch script

- submit it

SLURM batch scripts can also be called batch submit files, SLURM submit files, or job scripts.

To create a new batch script:

- Open a new file in your chosen editor (nano, emacs, vim, …)

- Write a batch script including SLURM batch system directives

- Remember to load any modules you may need

- Save the file

- Submit the batch script to the batch system queue using the command sbatch
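A minimal sketch of a batch script for a serial program. The file name myjob.sh is hypothetical, hpc2nXXXX-yyy must be replaced with your own project ID, and the command hostname just stands in for your real program:

```bash
#!/bin/bash
# Project ID to charge the job to (mandatory); replace with your own
#SBATCH -A hpc2nXXXX-yyy
# One task (the default) and a 10-minute wall-time limit
#SBATCH -n 1
#SBATCH --time=00:10:00

# Load any modules your program needs here, for example:
# ml GCC/8.3.0

# The actual work: here just print the name of the node the job ran on
hostname
```

Save it and submit it with sbatch myjob.sh. Unless you change it, the output ends up in slurm-JOBID.out in the directory you submitted from.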

Batch system directives¶

Batch system directives start with #SBATCH. The very first line (#!/bin/bash) says that the Linux shell bash will be used to interpret the job script. Here are some of the most common directives.

- -A specifies the local/NAISS project ID, formatted as hpc2nXXXX-YYY or NAISSXXXX-YY-ZZ. It is mandatory. Spaces and slashes are not allowed in the project ID, but the letters can be upper or lower case.

- -N specifies the number of nodes that SLURM should allocate for your job. This should only be used together with --ntasks-per-node or with --exclusive. In almost every case it is better to let SLURM calculate the number of nodes required for your job from the number of tasks, the number of cores per task, and the number of tasks per node.

- -J specifies a job name (the default is the name of the submit file).

- --output=OUTPUTFILE and --error=ERRORFILE specify paths to the standard output file (OUTPUTFILE) and the standard error file (ERRORFILE), named as you see fit. By default, standard output and standard error are combined into the file slurm-JOBID.out, where JOBID is the job-id of the job. If you name your output or error files, it is a good idea to include the variable %J (which contains the current job-id), as this ensures your previous files do not get overwritten.

- -n specifies the requested number of tasks. The default is one task.

- --time=HHH:MM:SS is the real (wall-clock) time, as opposed to the processor time, that should be reserved for the job. HHH is hours, MM is minutes, and SS is seconds. The maximum is 168 hours.

- -c specifies the requested number of CPUs (actually cores) per task. This can be useful if the job is multi-threaded and requires more than one core per task for optimal performance. It can also be used to get more memory than is available on one core. The default is one core per task.

- --exclusive requests the whole node exclusively for this job.

- --gres=gpu:CARDTYPE:x requests GPU resources, where CARDTYPE is k80, v100, or a100 and x is 1, 2, or 4 (4 only for K80 cards). For A100 cards you also need to add -p amd_gpu.

- By default your job’s working directory is the directory you start the job in

- Before running the program it is necessary to load the appropriate module(s) for various software or libraries

- Generally, you should run your parallel program with srun unless the program handles it itself
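The directives above combine into a job script like the following sketch for an MPI program. The program name my_mpi_program and the job name are hypothetical, and the module versions are borrowed from the GROMACS example in the module section, so check with ml spider which versions are actually installed:

```bash
#!/bin/bash
# Project ID (mandatory); replace with your own
#SBATCH -A hpc2nXXXX-yyy
# Job name shown by squeue
#SBATCH -J mpi_test
# Separate output and error files; %J expands to the job-id
#SBATCH --output=mpi_test_%J.out
#SBATCH --error=mpi_test_%J.err
# 8 tasks (MPI ranks) with one core each (one core per task is also the default)
#SBATCH -n 8
#SBATCH -c 1
# Wall-clock limit (at most 168 hours)
#SBATCH --time=00:30:00

# Start from a clean environment, then load the toolchain the program was built with
ml purge
ml GCC/8.3.0 OpenMPI/3.1.4

# srun starts one copy of the program per task
srun ./my_mpi_program
```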

Batch system commands¶

In the examples below, the SLURM batch script is called JOBSCRIPT, but you can call it anything you like. Traditionally, batch scripts have the extension .sh or .batch, but this is optional.

We refer to the job-id several times below. It is a unique id given to the job when it is submitted. It will be returned when the job is submitted and can also be found with the command squeue (more information below).

Submit job

Get a list of your jobs

USERNAME should be changed to your username. This list will contain the job-ids as well.

Check on a specific job with job-id JOBID

Delete a specific job with job-id JOBID

Delete all the jobs belonging to the user with username USERNAME

Note: you can only delete your own jobs.

Get a URL with graphical info about a job with job-id JOBID
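With standard SLURM commands, the tasks above look like this (JOBSCRIPT, JOBID, and USERNAME are placeholders). The last command is HPC2N's own helper; its name job-usage is given here as an assumption, so consult the Batch System section if it is not found:

```bash
# Submit the job script; sbatch prints the new job-id
sbatch JOBSCRIPT

# List your jobs, including their job-ids
squeue -u USERNAME

# Show detailed information about one job
scontrol show job JOBID

# Cancel one job
scancel JOBID

# Cancel all jobs belonging to USERNAME (only your own jobs can be cancelled)
scancel -u USERNAME

# HPC2N-specific (assumed name): print a URL with graphical usage info for the job
job-usage JOBID
```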

Batch system miscellaneous info¶

- Output and errors for a job with job-id JOBID are by default sent to a file named slurm-JOBID.out.

- To split output and errors into separate files, use the --output and --error flags in the submit script (and name OUTPUTFILE and ERRORFILE as you want). It is recommended to include the variable %J in the names, as that ensures new files are created for each run instead of overwriting the existing files.

- To run on the largemem nodes (if you have access to this resource), add the corresponding partition directive to your submit script.

- To constrain the nodes you run on to Intel Broadwell or Intel Skylake CPUs, add the corresponding constraint directive to your submit script.

- To use the GPU nodes on Kebnekaise, add #SBATCH --gres=gpu:CARDTYPE:x to your submit script, where CARDTYPE is k80, v100, or a100 and x = 1, 2, or 4 (4 only for the K80 type).

NOTE: for A100 you also need to add the partition: #SBATCH -p amd_gpu
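The directives referred to above look roughly like this. The file names are examples, and the partition name largemem and the constraint names broadwell and skylake are assumptions to verify in the Batch System section. These are fragments to copy group by group into your own script, not a complete script; SLURM ignores lines starting with ##SBATCH, which is used here to disable the alternatives:

```bash
# Separate output and error files; %J expands to the job-id
#SBATCH --output=job.%J.out
#SBATCH --error=job.%J.err

# Run on the largemem nodes (only if your project has access to them)
#SBATCH -p largemem

# Constrain to one CPU type: keep one of these lines active and disable the other
#SBATCH -C broadwell
##SBATCH -C skylake

# GPUs, --gres=gpu:CARDTYPE:x: here one V100 card
#SBATCH --gres=gpu:v100:1

# A100 cards also need the amd_gpu partition (use these instead of the V100 line)
##SBATCH --gres=gpu:a100:1
##SBATCH -p amd_gpu
```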

More information about batch scripts and the batch system can be found in the Batch System section.

Example job scripts¶

Change hpc2nXXXX-yyy to your actual project account number.
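As a sketch (not one of HPC2N's own examples), here is a job script for a multi-threaded OpenMP program that uses -c to give one task several cores. my_openmp_program is a hypothetical program name and GCC/8.3.0 is borrowed from the module example, so adjust both:

```bash
#!/bin/bash
# Replace with your own project ID
#SBATCH -A hpc2nXXXX-yyy
#SBATCH -J omp_test
# One task with 4 cores for its threads
#SBATCH -n 1
#SBATCH -c 4
#SBATCH --time=00:15:00

ml purge
ml GCC/8.3.0

# Use one OpenMP thread per core allocated to the task
export OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK

# A single multi-threaded task can be started directly
./my_openmp_program
```

SLURM sets the environment variable SLURM_CPUS_PER_TASK when -c is given, so the thread count follows the directive without editing the script body.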